Artificial

Intelligence 3E

foundations of computational agents

13.1 Reinforcement Learning Problem

A reinforcement learning agent is characterized as follows:

-

•

The learning agent is given the possible states of the world and the set of actions it can carry out.

-

•

At each time the agent observes the state of the world (the environment and the agent) and the reward received.

-

•

After observing the state and reward, the agent carries out an action.

-

•

The goal of the agent is to maximize its discounted reward, for some discount factor .

Reinforcement learning can be formalized in terms of Markov decision processes (MDPs), in which the agent initially only knows the set of possible states and the set of possible actions. The dynamics, , and the reward function, , are not given to the agent. As in an MDP, after each action, the agent observes the state it is in and receives a reward.

Example 13.1.

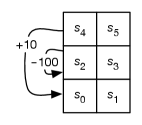

Consider the domain shown in Figure 13.1. There are six states the agent could be in, labeled . The agent has four actions: , , , . That is all the agent knows before it starts. It does not know how the states are configured, what the actions do, or how rewards are earned.

Figure 13.1 shows the configuration of the six states. Suppose the actions work as follows:

- right

-

The agent moves to the right in states ,, and , with a reward of 0 and stays still in the other states, with a reward of .

- left

-

The agent moves one state to the left in states , , and , with a reward of 0. In state , it stays in state and has a reward of . In state , it has a reward of and stays in state . In state , it receives a reward of and moves to state .

- upC

-

(for “up carefully”) The agent goes up, except in states and , where the agent crashes and stays still. It receives a reward of , except when it crashes, in which case there is a reward of .

- upR

-

(for “up risky”) With a probability of it acts like , except the reward is when it crashes, and 0 otherwise. With probability it acts as a , and with probability it acts as a .

There is a discounted reward with a discount of . This can be translated as having a 0.1 chance of the agent leaving the game at any step, or as a way to encode that the agent prefers immediate rewards over future rewards.

The agent should try to go left from as often as possible, to collect the reward of . Getting from to , it can go past a dangerous cliff at where there is a risk of falling off the cliff and getting a large negative reward, or going the longer and safer way around. Initially, it does not know this, but this is what it needs to learn.

Example 13.2.

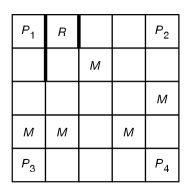

Figure 13.2 shows the domain of a more complex game. There are 25 grid locations the agent could be in. A prize could be on one of the corners, or there could be no prize. When the agent lands on a prize, it receives a reward of and the prize disappears. When there is no prize, for each time step there is a probability that a prize appears on any one of the corners. Monsters can appear at any time on one of the locations marked . The agent gets damaged if a monster appears on the square the agent is on. If the agent is already damaged, it receives a reward of . The agent can get repaired (so it is no longer damaged) by visiting the repair station marked .

In this example, the state consists of four components: , where is the -coordinate of the agent, is the -coordinate of the agent, is Boolean and is true when the agent is damaged, and is the position of the prize ( if there is no prize, if there is a prize at position ). Because the monsters are transient – knowing whether a monster appeared at a time does not provide any information about the future – it is not necessary to include them as part of the state. There are therefore states. The environment is fully observable, so the agent knows what state it is in. The agent does not know the meaning of the states; it doesn’t know about the four components and it has no idea initially about being damaged or what a prize is.

The agent has four actions: , , , and . These move the agent one step – usually one step in the direction indicated by the name, but sometimes in one of the other directions. If the agent crashes into an outside wall or one of the interior walls (the thick lines near the location ), it remains where is was and receives a reward of .

The agent does not know any of the story given here. It just knows there are 250 states and four actions, which state it is in at each time, and what reward was received at each time.

This game is simple, but it is surprisingly difficult to write a good controller for it. There are implementations available on the book’s website that you can play with and modify. Try to write a controller by hand for it; it is possible to write a controller that accumulates a reward of about 500 for each 1000 steps. This game is also difficult to learn, because visiting is seemingly useless until the agent eventually learns that being damaged is bad, and that visiting makes it not damaged. It must stumble on this while trying to collect the prizes. The states where there is no prize available do not last very long. Moreover, it has to learn this without being given the concept of damaged; all it knows, initially, is that there are 250 states and four actions.

Reinforcement learning is difficult for a number of reasons:

-

•

The credit assignment problem, or blame attribution problem, is the problem of determining which action was responsible for a reward or punishment. The action responsible may have occurred a long time before the reward was received. Moreover, not just a single action but rather a combination of actions carried out in the appropriate circumstances may be responsible for a reward. For example, you could teach an agent to play a game by rewarding it when it wins or loses; it must determine the brilliant moves, which usually occur long before the end, that were needed to win. As another example, you may try to train a dog by saying “bad dog” when you come home and find a mess. The dog has to determine, out of all of the actions it did, which of them were the actions that were responsible for the reprimand.

-

•

Even if the dynamics of the world does not change, the effect of an action of the agent depends on what the agent will do in the future. What may initially seem like a bad thing for the agent to do may end up being the best action because of what the agent does in the future. This is common among planning problems, but it is complicated in the reinforcement learning context because the agent does not know, a priori, the effects of its actions.

-

•

The explore–exploit dilemma: if an agent has worked out a good course of actions, should it continue to follow these actions (exploiting what it has determined) or should it explore to find better actions? An agent that never explores may act forever in a way that could have been much better if it had explored earlier. An agent that always explores will never use what it has learned. Exploration may lead to irreversible damage. This dilemma is discussed further in Section 13.5.